4 Ways Computational Software Is Transforming System Design & Hardware Design

by Anirudh Devgan, President & CEO, Cadence Design Systems

I. Introduction: System & Hardware Design Strides & Challenges

Electronic systems are changing at an accelerating pace, with technologies such as hyperscale data centers, smart devices, 5G communications, building and home automation, self-driving cars, and health care enabled by remarkable advances in:

- System design – which defines and combines all the elements of a complete system, spanning the implementation and verification of the PCB, 3D packaging, and integrated intelligent sensors, along with signal, electromagnetic, and electrothermal integrity analysis.

- Hardware design – which implements the underlying innovative system functionality, spanning the entire chip design and verification process from abstract modeling through tapeout.

As companies face fierce competitive challenges to deliver new, highly differentiated products even faster and more efficiently, their design flows must transform to meet these requirements:

- Extraordinary tool capacity needs due to the data explosion from increasing functionality & complexity, shrinking process technology, and multi-element system packages

- Leveraging inexpensive compute servers to scale performance on demand

- A greater need for co-optimization, driven by the growing impact of multi-physics effects at advanced nodes and higher densities, and by companies differentiating through increased customization

- Tightening resource availability mandating higher productivity & ROI

To keep pace with these drivers, the computational software underlying the system and hardware design development platforms has been undergoing dramatic and accelerated technology advances.

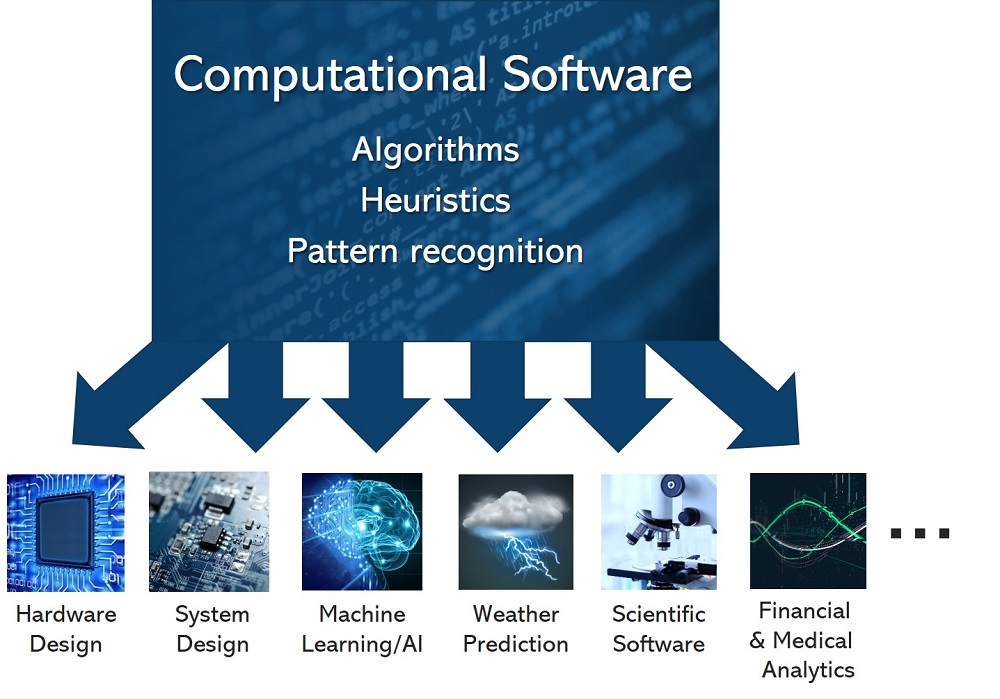

II. What is Computational Software?

Computational software refers to a class of software composed of complex algorithms and sophisticated numerical analysis for heuristics and pattern recognition.

Today’s computational software applications span numerous industries, including semiconductors, systems, weather prediction, scientific software, and financial, medical, and business analytics. It is also used heavily in today’s AI and machine learning algorithms.

Below are a few examples of computational software:

- Algorithms: Solvers (matrix, SAT, SMT…), decision diagrams, generators, predictive scheduling, checkers, adaptive meshing, matrix solving, computational fluid dynamics, clustering, finite element methods…

- Heuristics: Randomization strategies, simulations, inference engines, graph methods, probability estimation, sampling…

- Pattern recognition: Classification, regression, macro modeling, optimization trees…

Not all software is considered computational software. For example, the category does not include using Java to build a graphical user interface.

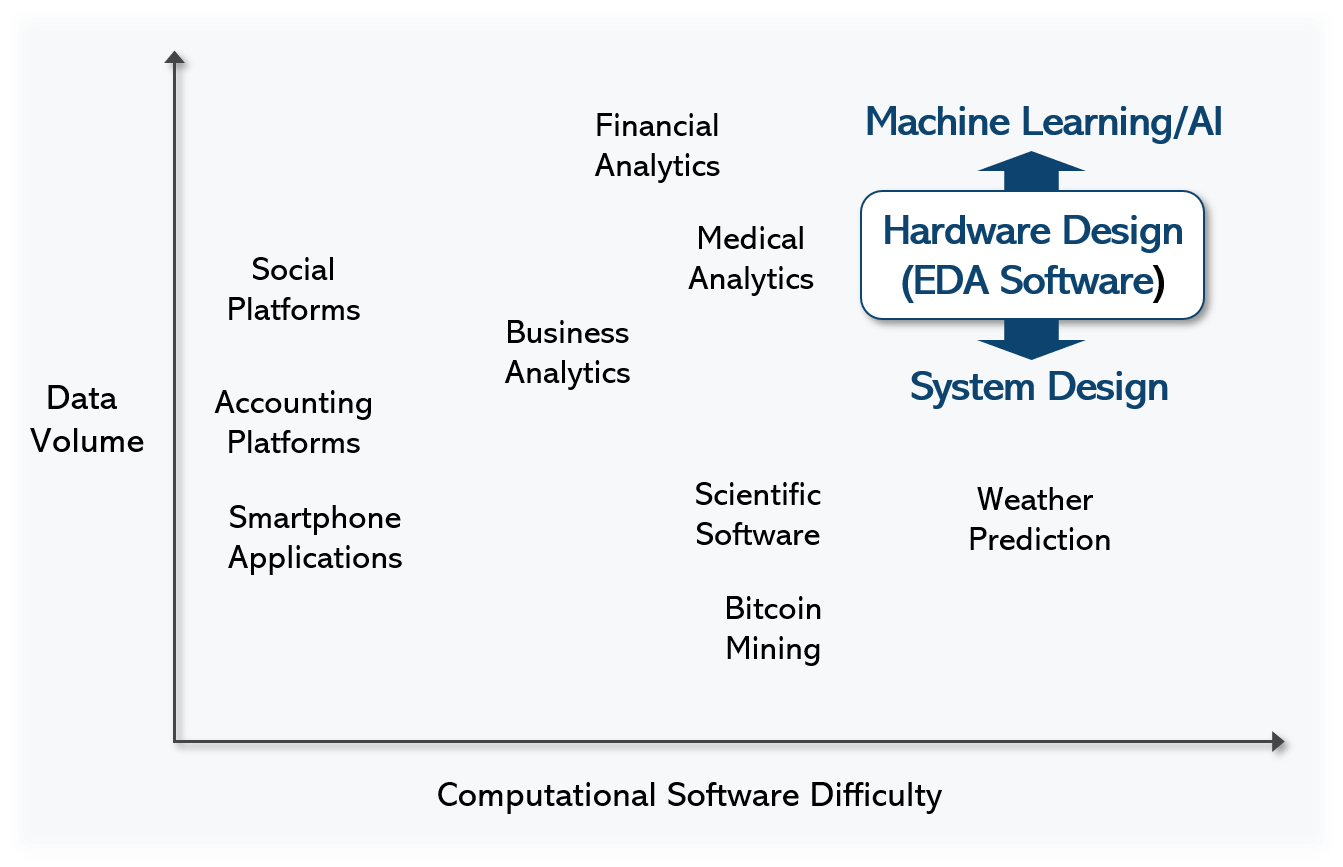

III. EDA Industry Computational Software Driving Breakthroughs in Adjacent Areas

Computational software has been the DNA of the electronic design automation (EDA) software industry for multiple decades, delivering major capability and productivity advances in hardware design. The EDA software industry has uniquely mastered multiple aspects:

- Applying highly tuned algorithms across diverse applications

- Developing algorithms that can operate on enormous data volumes

- Splitting computation for massively parallel execution

Multiple industries now require computations that support hundreds of thousands to millions of elements. This is a level the EDA industry conquered one to two decades ago; EDA tools now support designs with billions of elements.

The complex computational software that has driven advances in efficiency, product quality, and compute power for hardware design has recently enabled new breakthroughs both in the adjacent area of system design and in hardware design itself.

Below are four areas where today’s computational software advances are transforming both system design and hardware design.

IV. Four Ways Computational Software Is Transforming System & Hardware Design

1. Breaking Capacity/Performance Scaling Barriers – Highly Distributed Compute

System and hardware design flow capacity levels must scale quickly to accommodate the data explosion from smaller process nodes, multi-physics analysis across multi-fabric designs, and the need to test systems across operating conditions.

Advanced computational software has successfully responded to hardware design’s intense capacity and performance demands via highly distributed compute methods that efficiently leverage on-premise and cloud compute servers.

However, system design tools had lagged behind, notably the 3D solvers used to create highly accurate models for the signal, power, electromagnetic, and thermal integrity analysis performed while designing critical interconnects for PCBs, packaging, and 2.5D/3D ICs.

These 3D solvers use sophisticated computational software elements, such as finite element analysis, adaptive meshing, matrix solving, and computational fluid dynamics. Unfortunately, their capacity and performance limitations slowed projects down. Additionally, many designers were forced to hand-partition large structures into smaller pieces to analyze them in a reasonable time, creating accuracy risks wherever a partition boundary fell at a signal transmission weak point.

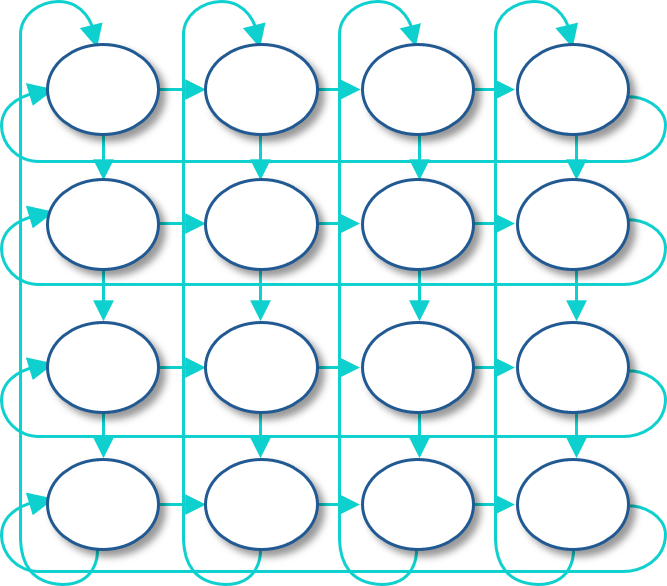

Computational Software Impact: EDA’s most advanced distributed compute methods from hardware design were deployed to close this system design gap, splitting the 3D solver computation across a massively parallel, distributed matrix solver.

Very large sparse matrices were partitioned across cores, and each sub-matrix was solved separately, while precisely balanced communication between the cores maintained close to linear scaling. Layers of overlap between the interdependent pieces allowed the partial results to be reconciled into the whole with full accuracy.

The high-value result was a ten-fold 3D solver performance gain (e.g., from 20 hours to 2 hours) with virtually unlimited capacity, while preserving the integrity of the analysis to retain “golden” accuracy levels.
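The partition-and-overlap idea described above can be illustrated with a textbook technique, overlapping domain decomposition. The sketch below is a minimal single-process illustration, assuming a small 1D Laplacian as a stand-in for a 3D solver's sparse system and using an additive Schwarz preconditioner inside conjugate gradient; it is not Cadence's solver, whose internals the article does not describe. The helper names (`laplacian_1d`, `overlapping_blocks`, `additive_schwarz`, `pcg`) are hypothetical, and the independent sub-matrix solves that a distributed solver would place on separate cores are simply run in a loop here.

```python
import numpy as np


def laplacian_1d(n: int) -> np.ndarray:
    """Tridiagonal, symmetric positive definite test matrix (stored dense for brevity)."""
    return 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)


def overlapping_blocks(n: int, parts: int, overlap: int) -> list:
    """Split indices 0..n-1 into contiguous blocks, each widened by `overlap` rows per side."""
    bounds = np.linspace(0, n, parts + 1).astype(int)
    return [np.arange(max(lo - overlap, 0), min(hi + overlap, n))
            for lo, hi in zip(bounds[:-1], bounds[1:])]


def additive_schwarz(A: np.ndarray, blocks: list):
    """Return a function computing z = sum_i R_i^T A_i^{-1} R_i r for sub-matrices A_i."""
    local = [A[np.ix_(idx, idx)] for idx in blocks]  # each could live on its own core

    def apply(r: np.ndarray) -> np.ndarray:
        z = np.zeros_like(r)
        for idx, A_i in zip(blocks, local):
            z[idx] += np.linalg.solve(A_i, r[idx])  # independent local solve
        return z  # contributions from overlapping rows are summed

    return apply


def pcg(A, b, precond, tol=1e-10, max_iter=1000):
    """Preconditioned conjugate gradient; returns (solution, iterations used)."""
    x = np.zeros_like(b)
    r = b.copy()  # residual b - A @ x with x = 0
    z = precond(r)
    p = z.copy()
    rz = r @ z
    for k in range(1, max_iter + 1):
        Ap = A @ p
        alpha = rz / (p @ Ap)
        x += alpha * p
        r -= alpha * Ap
        if np.linalg.norm(r) <= tol * np.linalg.norm(b):
            return x, k
        z = precond(r)
        rz_new = r @ z
        p = z + (rz_new / rz) * p
        rz = rz_new
    return x, max_iter


n = 200
A = laplacian_1d(n)
b = np.ones(n)
x, iters = pcg(A, b, additive_schwarz(A, overlapping_blocks(n, parts=8, overlap=4)))
print(iters, np.linalg.norm(A @ x - b) / np.linalg.norm(b))
```

Scalable domain-decomposition solvers usually add a coarse-level correction, because one-level iteration counts grow with the number of subdomains; it is omitted here for brevity.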

2. Server Farm Cost Reduction – Small Memory Footprint

As discussed above, today’s advanced system and hardware design software now uses multiple CPU cores, and even multiple servers, for compute.

However, distributed compute can require the entire design image to be copied to each distributed machine, which demands larger machines and can cost design teams tens of thousands of dollars each week.

As a result, the memory footprint of each parallel compute process becomes critical.

Computational Software Impact: For the system design 3D solver discussed above, computational software for highly distributed compute reduced the memory footprint by 5 to 10X, e.g., from more than 100 GB per machine to only 10-20 GB per machine. The same reduction has been achieved for IR drop analysis and static timing analysis in hardware design.

The smaller memory footprint means design teams can deploy server farms, on-premise or in the cloud, with smaller, cheaper machines. These dramatic cost savings make it affordable to use larger compute server farms to achieve even faster turnaround.
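A back-of-the-envelope model shows why copying the full design image to every machine is expensive and why partitioning helps. The sketch below assumes, hypothetically, that each worker holds only its slice of the data plus an overlap halo and a fixed per-process overhead; the function names, worker count, overlap fraction, and overhead are illustrative values, not measurements from the tools described above.

```python
def replicated_gb(image_gb: float) -> float:
    """Per-worker memory when every worker holds a full copy of the design image."""
    return image_gb


def partitioned_gb(image_gb: float, workers: int, overlap_frac: float, overhead_gb: float) -> float:
    """Per-worker memory when each worker holds only its slice, padded by an overlap halo."""
    return image_gb / workers * (1.0 + overlap_frac) + overhead_gb


image = 100.0  # GB, matching the ">100 GB per machine" order of magnitude cited above
# Hypothetical parameters chosen for illustration only.
per_worker = partitioned_gb(image, workers=8, overlap_frac=0.10, overhead_gb=5.0)
ratio = replicated_gb(image) / per_worker
print(f"replicated: {image:.0f} GB/worker, partitioned: {per_worker:.2f} GB/worker ({ratio:.1f}x smaller)")
```

With these illustrative values each worker needs about 18.75 GB instead of 100 GB. The fixed overhead term puts a floor under per-worker memory, so adding more workers yields diminishing reductions.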

3. Co-Optimization & Superior Quality of Results – Native Integration

There are many degrees of inter-tool integration for the highly iterative system and hardware design flows. Shallower integration levels were adequate at earlier process nodes: designers could apply margins, wait until late-stage sign-off, and then focus their time on engineering change orders (ECOs) to improve performance, power, and area (PPA) enough to meet product specifications.

At smaller process nodes, for certain phases of design, engineers no longer have this luxury. To achieve the targeted quality of results, multi-physics effects such as crosstalk and electromagnetic effects must now be measured and understood during implementation rather than waiting for the sign-off stage.

Computational Software Impact: Co-optimization analyzes interdependent elements simultaneously to achieve the best quality of results. A key enabler of co-optimization is the sophisticated computational software underlying “native integration”, the deepest integration level.

Native integration requires integration at the binary level within a single executable file: one program optimized for a specific task. In digital hardware design, for example, designers can now push a button and have static timing analysis, power analysis, and place and route computed together. This automated co-optimization reduces design flow iterations, leading to improved quality of results.

System design is also moving toward co-optimization of multi-physics analysis, such as power integrity, thermal integrity, and inductance, during system design implementation, for example in 5G applications.

4. Higher Productivity & ROI – Machine Learning

Resources are extremely tight, even as design complexity increases. The process of exploring and iterating across numerous tools to create, optimize, and verify designs for target specifications is highly manual and takes weeks to months. With millions to billions of elements, the number of variables to consider is too vast for humans to absorb when making optimal decisions.

Computational Software Impact: There is tremendous excitement around the early results and upside potential for machine learning to achieve greater resource efficiency and improve ROI by augmenting system and hardware design optimization and verification flows.

Deploying machine learning requires deep computational software expertise: algorithms, pattern recognition, and heuristics that use judgments and deductive reasoning based on experience. Thus, effective machine learning deployments require domain knowledge.

At the design flow level, one challenge is predicting what a sub-circuit will look like a few steps further down the flow; without that foresight, a large number of iterations are required to converge. To overcome this obstacle, one successful ML application uses experiments to train a model that predicts the delay after various hardware design implementation steps. It then uses that delay model to make better decisions.

The early results have been terrific. With proper user guidance, the machine learning technology has successfully raised productivity by at least 2X, bringing a much higher ROI for the software, hardware, and engineering resources deployed.
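The delay-prediction approach described above can be sketched as a simple supervised regression. This is a minimal illustration under stated assumptions, not the ML technology the article refers to: the three path features (fanout, estimated wire length, logic depth), the synthetic training data, the helper names, and the linear least-squares model are all hypothetical choices made for clarity. The model is fit on results from earlier runs, then used to rank candidate implementation choices without pushing each one through the full flow.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical early-stage features per path: fanout, estimated wire length (um), logic depth.
X_train = rng.uniform([1, 10, 2], [16, 500, 20], size=(200, 3))

# Synthetic "post-implementation" delays (ps) standing in for results from earlier flow runs.
true_w = np.array([3.0, 0.2, 12.0])
y_train = X_train @ true_w + 15.0 + rng.normal(0.0, 2.0, size=200)


def with_bias(X: np.ndarray) -> np.ndarray:
    """Append a constant column so the model learns an intercept."""
    return np.column_stack([X, np.ones(len(X))])


def fit_delay_model(X: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Ordinary least-squares fit of delay against the early-stage features."""
    coef, *_ = np.linalg.lstsq(with_bias(X), y, rcond=None)
    return coef


def predict_delay(coef: np.ndarray, X: np.ndarray) -> np.ndarray:
    """Predicted downstream delay (ps) for each row of features."""
    return with_bias(X) @ coef


coef = fit_delay_model(X_train, y_train)

# Rank hypothetical candidate implementations by predicted downstream delay
# instead of running each one through the remaining flow steps.
candidates = np.array([[4, 120, 8], [2, 300, 6], [8, 80, 10]], dtype=float)
predicted = predict_delay(coef, candidates)
best = int(np.argmin(predicted))
print(predicted.round(1), "-> choose candidate", best)
```

In practice the features, the model family, and the training data would come from the domain knowledge the article emphasizes; a linear fit is only the simplest stand-in.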

V. Conclusion: Expanding Computational Software Applications

Dramatic advances in computational software are enabling companies to meet fierce competitive challenges by transforming system design and hardware design flows: 1) breaking existing tool flow capacity barriers; 2) lowering the cost of on-demand compute power; 3) improving product quality; and 4) raising productivity and ROI.

Continuing breakthroughs in computational software (in algorithms, numerical analysis for heuristics, and pattern recognition) can also transform other sectors, such as thermal flows, scientific analysis, and much more.

ABOUT THE AUTHOR

Anirudh Devgan, President & CEO of Cadence.

Anirudh Devgan has served as President & CEO of Cadence since December 2021. He previously served as President of the company from 2017 to 2021, overseeing all business groups, research and development, sales, field engineering and customer support, strategy, marketing, mergers and acquisitions, business development, and IT.

Prior to Cadence, he held executive positions at Magma Design Automation and at IBM, where he received the IBM Outstanding Innovation Award. Devgan received a Bachelor of Technology degree in electrical engineering from the Indian Institute of Technology, Delhi, and MS and PhD degrees in electrical and computer engineering from Carnegie Mellon University. He is an IEEE Fellow and holds numerous patents.